The exorbitant cost of GPU-accelerated systems for training and inference and the latest rush to find gold in mountains of corporate data are combining to exert tectonic forces on the datacenter landscape and push up a new Himalaya range – with Nvidia as its steepest and highest peak.

It is a thing to behold. And perhaps to fear. But progress is our most important product, and so the world will throw its economic weight behind generative AI and we will have to see how this all plays out. In a way, it is comforting to know that when the world believes in something, it can turn on a dime and spend a few hundred billion dollars without hesitation.

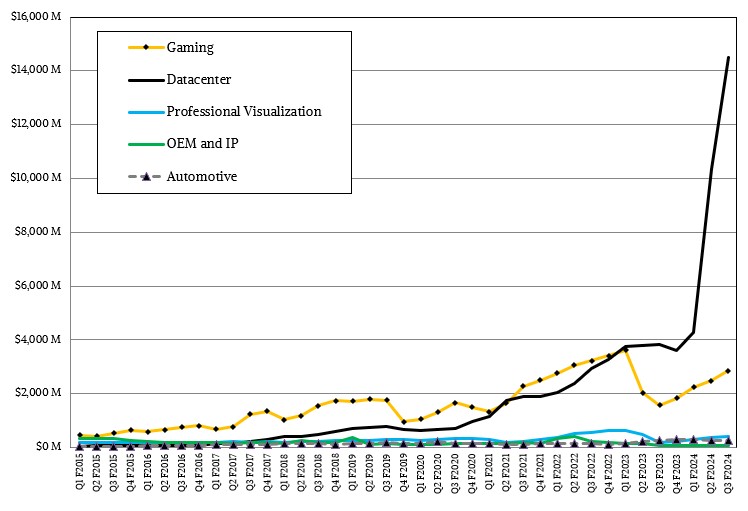

Just look at this chart:

Good luck extrapolating from that curve. What seems clear is that Nvidia has been able to expand the availability of its “Hopper” H100 GPU accelerators quite significantly in the past three quarters and that it has been able to maintain and perhaps even increase the price it can get for these devices. That’s no surprise when Nvidia can make on the order of maybe 500,000 of these GPUs this year in a world that wants millions.

In the quarter ended in October, Nvidia’s revenues more than tripled to $18.12 billion and its net income grew by a factor of 13.6X year on year to hit a stunning $9.24 billion. Yup, for every dollar that Nvidia is making these days, 51 cents is going to the bottom line as net income – not the middle line of operating income, mind you. Nvidia has the IT market right where it wants it.
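The 51 cents figure can be checked with a few lines of arithmetic. This is only a sketch that uses the revenue, net income, and growth multiple quoted above; the variable and function names are ours, and the year-ago net income is back-calculated from the 13.6X multiple rather than taken from a reported figure.

```python
# Figures quoted in the text, in billions of US dollars.
revenue = 18.12
net_income = 9.24
net_income_growth = 13.6  # year-on-year multiple quoted in the text


def net_margin(net_income: float, revenue: float) -> float:
    """Share of each revenue dollar that ends up as net income."""
    return net_income / revenue


margin = net_margin(net_income, revenue)

# Back-calculated, not a reported number: what the year-ago quarter's
# net income would have to be for the 13.6X multiple to hold.
implied_prior_net_income = net_income / net_income_growth

print(f"Net margin: {margin:.1%}")
print(f"Implied year-ago net income: ${implied_prior_net_income:.2f} billion")
```

The margin works out to just under 51 percent, which is where the 51 cents on the dollar comes from.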

And it won’t last because an unnatural condition like this – and especially like this – cannot last. Economic substitution and competitive pressures will come, prices will fall, volumes will increase, and it will all settle out with Nvidia having the lion’s share of a very profitable accelerated computing continent. Nvidia cannot and will not have this kind of market share ever again in its presumed long economic life. But, damn, Nvidia can hold onto it for a while and it is an amazing thing to see.

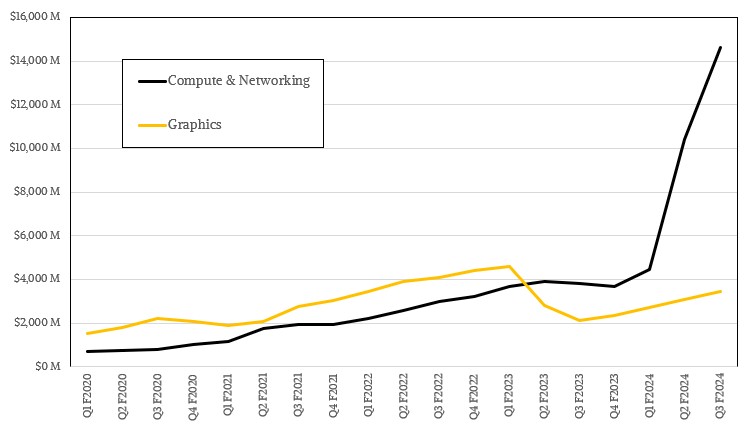

Nvidia’s Datacenter division, and probably more precisely its Compute & Networking group, are the main drivers of Nvidia’s monumental growth.

In any normal quarter from say a few years ago, when compute/networking and graphics were running at about the same revenue rate, if Nvidia reported its Graphics group had sales of $3.48 billion, up 64.3 percent, everyone would be sighing in relief that the gamers and professional creators of the world were increasing their spending after a steep decline in calendar 2022 basically cut this business in half and allowed the Compute & Networking business to outgrow it and surpass it for the first time – and thanks in no small part to the acquisition of Mellanox Technologies several years prior.

Had the generative AI boom not happened at the end of 2022, we might be talking about how the HPC market was in a boom cycle and Nvidia was getting its share of that action while various kinds of machine learning were progressing along, giving Nvidia’s datacenter business a long runway to be something that might someday rival the likes of an IBM in its System/360 mainframe heyday in the 1960s and the 1970s.

If the Nvidia Hopper and Blackwell HGX platforms do not rival the System/360 and the System/370 in terms of their relative importance in the IT budget and the utter transformation of the economy, then nothing ever will. The Unix revolution promoted openness of a sort and application portability as well as price performance, and the X86 and Linux revolution extended this even further. But this is a completely new computing paradigm that just so happens to need an enormous amount of compute, networking, and storage to work – even as imperfectly as it does.

In the third quarter of fiscal 2024 ended in October, the Compute & Networking group had $14.64 billion in sales, up by a factor of 3.83X compared to the year ago quarter, and the Datacenter division (which is not a perfect overlay because some of Nvidia’s compute and networking products are sold for uses outside of the datacenter) had sales of $14.51 billion, up by 3.78X year on year.

The Datacenter division represented 80.1 percent of the company’s total sales in the quarter, a record high and a condition that probably will not change for many years. And thus, no one really cares right now that the Gaming division had sales of $2.86 billion, up 81.4 percent, and that Professional Visualization had sales of $416 million, up 108 percent. The Automotive division, at $261 million, might be important long term, but right now its 4 percent growth rate and its diminutive size rate no more than this passing comment. Someday, this will be a multi-billion-dollar business for Nvidia, and it will counterbalance some hardship in the datacenter business, which will eventually level out and, depending on how well Nvidia keeps innovating and how poorly the competition might, may even decline. It is hard to see that right now, but it happened to IBM, it happened to Sun Microsystems, and it happened to Intel – all of which lost their maniacally focused founders and abdicated their responsibilities to innovation over the long haul for the sake of pleasing Wall Street each and every quarter.

Perhaps Nvidia can be different. It all depends on co-founder and chief executive officer Jensen Huang, who seems to be cut from the same kind of cloth as the founders who created their own mountains in prior IT orogenies. But all founders eventually retire. One way or the other. Given the vitality and relative youth of Huang, Nvidia has at least a decade of raising hell, and perhaps another decade of raking in money through sheer inertia after that. So Wall Street, relax.

In the quarter, cloud service providers – what we call cloud builders – comprised about half of Datacenter division sales, and the other half came from consumer Internet companies – what we call hyperscalers – and enterprise customers, a category that also includes governments and academia. We wish that Nvidia would break enterprise free of the hyperscalers and cloud builders, and we wonder how Nvidia is allocating sales to companies like Microsoft, Google, and even Amazon Web Services to a certain extent, which offer cloud services and also have their own applications running in the cloud either for free with ad support or for a fee. Suffice it to say, hyperscalers and cloud builders account for far more than 50 percent of Nvidia’s Datacenter sales, which is a lot more than the 50 percent or so share they hold of the IT market at large. Nvidia is heavily concentrated among the upper echelon of compute among the hyperscalers and cloud builders.

But that will change as generative AI goes mainstream in the enterprise. This is just the first phase of mountain building, and Nvidia’s customer mix should eventually reflect the market at large as companies and countries build their own “sovereign AI clouds,” as Huang called them during a call with Wall Street going over the numbers. Nvidia never did believe that companies would move everything to the cloud builders, and its own continued investment in homegrown supercomputers and sales of similar machinery to the HPC centers of the world show it.

When we put what Nvidia said on the call through our spreadsheet magic, we reckon that the Compute part of Compute & Networking accounted for $11.94 billion in sales, up 4.24X compared to a year ago, and the Networking part accounted for $2.58 billion in sales, up by 2.55X year on year. Huang said that InfiniBand networking sales rose by a factor of 5X in the quarter, and we think that works out to $2.14 billion and represents 83.1 percent of all networking. The Ethernet/Other portion of the Networking business accounted for $435 million, and declined by 25.2 percent. But again, as the enterprise buildout of generative AI infrastructure continues, we expect Spectrum-X Ethernet sales for AI clusters to grow, so the ups and downs for Ethernet are more about the lumpiness of sales of these products to hyperscalers, cloud builders, telcos, and other service providers than anything else. The point is that the networking business in the aggregate is now a $10 billion business in its own right and is about eight times bigger than when Nvidia bought it.
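For readers who want to retrace the networking split, here is a rough sketch of the arithmetic using only the estimates quoted above. The variable names are ours, and reading the "$10 billion business" as the quarterly figure multiplied by four is our assumption about how that number was reached, not something the text states.

```python
# Estimates quoted in the text, in billions of US dollars.
infiniband = 2.14
ethernet_other = 0.435
networking_quoted = 2.58  # rounded Networking total given in the text

# Growth factors quoted in the text.
infiniband_growth = 5.0         # "rose by a factor of 5X"
ethernet_growth = 1.0 - 0.252   # "declined by 25.2 percent"
networking_growth = 2.55        # "up by 2.55X year on year"

# InfiniBand's share of the networking total.
networking = infiniband + ethernet_other
infiniband_share = infiniband / networking

# Assumption: the "$10 billion business" is an annualized run rate.
annual_run_rate = networking * 4

# Consistency check: the year-ago total implied by the two parts
# should roughly match the one implied by the overall growth factor.
prior_from_parts = (infiniband / infiniband_growth
                    + ethernet_other / ethernet_growth)
prior_from_total = networking_quoted / networking_growth

print(f"InfiniBand share: {infiniband_share:.1%}")
print(f"Annualized run rate: ${annual_run_rate:.1f} billion")
print(f"Year-ago networking, from parts: ${prior_from_parts:.2f} billion")
print(f"Year-ago networking, from total: ${prior_from_total:.2f} billion")
```

Both routes land at roughly $1.01 billion for the year-ago quarter, so the InfiniBand and Ethernet estimates hang together with the 2.55X growth figure.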

There is much concern about export controls for GPUs that are sold into China, which accounted for somewhere between 20 percent and 25 percent of datacenter revenue in the past few quarters. This business “will decline significantly” in the coming quarter as the US government locks it down to try to blunt the rise of AI and HPC in China, but there is so much demand in other regions where Nvidia can sell its GPUs right now that it will not impact sales. Nvidia can still sell a lot more GPUs than it can make, so it is hard to see what all of the fuss is about. In the longest of runs, Nvidia does not want an indigenous GPU market to arise in China, but the US government is ensuring it. In the longest of runs, Nvidia may therefore see greater competition from Chinese manufacturers who can sell globally whereas Nvidia, AMD, and Intel cannot sell into China to compete with them, or better still, keep them from evolving and competing in the first place.

No matter what, China will have AI and HPC superpowers because it is an economic superpower, and US foreign policy doesn’t seem to understand that.

So that leaves the big question: Can Nvidia grow its datacenter business in 2024 and 2025? (We are talking calendar years here.)

“Absolutely believe the datacenter can grow through 2025,” Huang said when asked this question on the call. “And there are, of course, several reasons for that. We are expanding our supply quite significantly. We have already one of the broadest and largest and most capable supply chains in the world.” Huang went on to talk about the 35,000 different components in the HGX system board, only eight of which are the GH100 GPUs themselves, and then he said this: “We’re seeing the waves of generative AI starting from the start-ups and CSPs, moving to consumer Internet companies, moving to enterprise software platforms, moving to enterprise companies. And then ultimately, one of the areas that you guys have seen us spend a lot of energy on has to do with industrial generative AI. This is where Nvidia AI and Nvidia Omniverse come together and that is a really, really exciting work. And so I think we’re at the beginning of an across-the-board industrial transition to generative AI based on accelerated computing. This is going to affect every company, every industry, every country.”

Depending on where you want to put that growth for the final quarter of fiscal 2024, Nvidia should end up raking in somewhere between $42 billion and $46 billion in revenues from the Datacenter division. It is not unreasonable, given the demand for generative AI compute engines and networking, to expect revenues of $60 billion to $70 billion in fiscal 2025, with things balancing out somewhat with sales on a more normal growth trajectory to hit $80 billion to $90 billion in fiscal 2026 and for Nvidia to possibly break through $100 billion in fiscal 2027.

Double that number to get the system revenue those Nvidia compute and networking components will drive, and then multiply half of that system number by 2.5X to get the markup as cloud builders peddle that system by the hour over three years. Now divide it out for one year, and that is $185 billion in consumption for AI compute and networking in 2027 alone. And Nvidia will probably still represent 65 percent to 70 percent of the market, which will be just short of $300 billion or so of compute and networking consumption.
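That back-of-the-envelope chain is easier to follow when written out. The sketch below is one reading of it, not a definitive model: it assumes the starting point is the $100 billion fiscal 2027 figure, and it assumes the half of system revenue that does not go through the cloud builders is counted at its purchase price in that year, which the text does not spell out. The function and parameter names are ours.

```python
def annual_ai_consumption(
    nvidia_revenue: float,
    system_multiple: float = 2.0,  # "double that number"
    cloud_share: float = 0.5,      # "half of that system number"
    rental_markup: float = 2.5,    # "multiply ... by 2.5X"
    rental_years: int = 3,         # "by the hour over three years"
) -> float:
    """Estimate one year of AI compute and networking consumption.

    All amounts are in billions of US dollars.
    """
    system_revenue = nvidia_revenue * system_multiple
    cloud_systems = system_revenue * cloud_share
    # Assumption: the remainder is bought outright and counted as-is.
    direct_systems = system_revenue - cloud_systems
    # Cloud systems are marked up, then spread across the rental period.
    cloud_rental_per_year = cloud_systems * rental_markup / rental_years
    return direct_systems + cloud_rental_per_year


consumption = annual_ai_consumption(100.0)

# Scale up to the whole market if Nvidia holds 65 to 70 percent of it.
market_low = consumption / 0.70
market_high = consumption / 0.65

print(f"Consumption tied to Nvidia gear: ${consumption:.0f} billion")
print(f"Implied total market: ${market_low:.0f} to ${market_high:.0f} billion")
```

Under these assumptions the result is about $183 billion, close to the $185 billion cited, and the implied total market comes to roughly $260 billion to $280 billion, which squares with "just short of $300 billion."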

Right now, if you haven’t been thinking about how you might stretch the lifetime of your existing server fleets to save yourself some IT budget to reinvest in generative AI, you must be the only one. Because that is what we see going on in the market. But over the long haul, traditional workloads will have to have platform upgrades and AI will not be a rushed science project, but a new platform with enormous budgets.

There is plenty of money yet to be made here. And we haven’t even talked about Nvidia’s potential for server and client CPUs. . . .
