In This Post

- 1 Diving Deep into the AI Value Chain

- 3 Dell Technologies Storage Advancements Accelerate AI and Generative AI Strategies:

- 4 Huawei Releases Range of Innovative Practices to Help Carriers Build:

- 5 Dell Technologies Boosts AI Performance with Advanced Data Storage and:

- 6 The Vast Potential For Storage In A Compute Crazed AI World:

- 8 How Vast is unifying data storage, database and AI compute for global access:

- 9 AI and Data Storage: Reducing Costs and Improving Scalability:

- 10 DAOS- Intel’s Open Source baby for Super Computing and AI:

- 11 PeakAIO CEO: Less is more to get the most out of AI storage:

- 12 NAS devices for enterprises in 2024 and buying factors:

- 13 Compare block vs. file vs. object storage differences, uses:

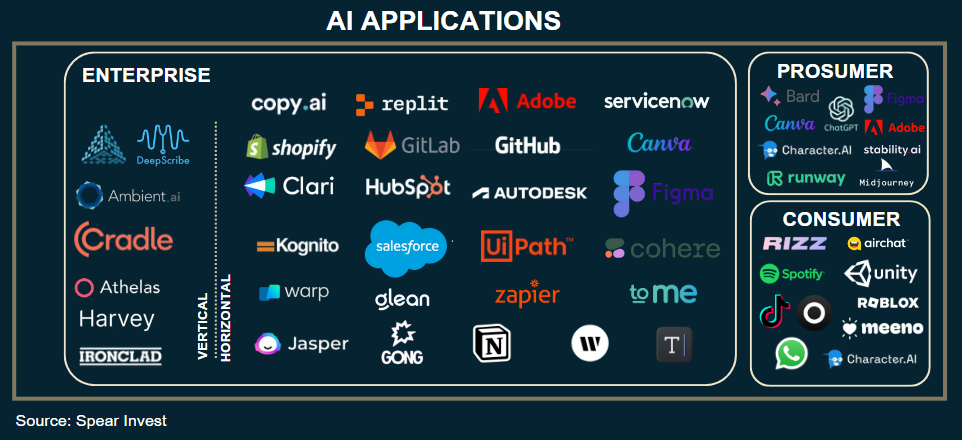

Diving Deep into the AI Value Chain

“Diving Deep into the AI Value Chain” is a report by McKinsey Digital that explores the opportunities and challenges of generative AI, a technology that can create new and original content from data. The report provides an overview of the generative AI value chain, which consists of four main components: the AI tool, the training data, the production data, and the AI output. It also discusses the different actors, business models, and use cases in the generative AI ecosystem, as well as the ethical, legal, and social implications of the technology. The report aims to help investors and business leaders understand the potential and risks of generative AI and identify the best ways to leverage it for innovation and value creation.

Dell Technologies Storage Advancements Accelerate AI and Generative AI Strategies:

Dell PowerScale, Dell’s scale-out file storage platform, is presented as delivering faster and better AI and GenAI outcomes for customers and partners such as Nvidia.

Huawei Releases Range of Innovative Practices to Help Carriers Build:

This article covers Huawei’s AI storage solution, which uses cutting-edge technologies to accelerate data preprocessing and support the training of LLMs with trillions of parameters.

Dell Technologies Boosts AI Performance with Advanced Data Storage and:

This announcement covers Dell Technologies’ new enterprise data storage advancements and their validation with the NVIDIA DGX SuperPOD AI infrastructure, which aim to help customers achieve faster AI and GenAI performance.

The Vast Potential For Storage In A Compute Crazed AI World:

Vast Data, a startup that provides a data platform for enterprises and cloud service providers, is attracting customers and investors with its innovative solution that unifies data storage, database, and compute engine services. The article highlights the features and benefits of Vast Data’s platform, such as its ability to support high-performance data analytics and AI workloads, its scalability and cost-efficiency, and its integration with next-generation NVM technologies. The article also mentions some of the customers and partners of Vast Data, such as Lawrence Livermore National Laboratory, Texas Advanced Computing Center, and Nvidia.

The article examines the success and potential of Vast Data as a provider of a new kind of storage platform for large-scale, high-performance workloads such as HPC and AI. Vast Data has raised $381 million in total funding and has a valuation of $9.1 billion, making it a likely candidate for a blockbuster IPO in 2024 or 2025. Its storage platform uses flash storage and the NFS protocol to deliver fast, scalable, and cost-effective data access for customers such as Lawrence Livermore National Laboratory, Texas Advanced Computing Center, and several AI cloud builders. Vast Data also blurs the lines between storage and databases by allowing customers to run queries and analytics directly on the storage layer, without having to copy or move data. The article argues that Vast Data has created a unique solution for the data-intensive challenges of modern workloads, and that it has a bright future ahead.

How Vast is unifying data storage, database and AI compute for global access:

In this interview, Vaughn Stewart, vice president of systems engineering at Vast Data, explains how the company is changing data management and access for AI and GPU-powered workloads. Stewart describes the advantages of Vast Data’s platform over traditional hyperscalers and legacy enterprise infrastructure, such as its performance, simplicity, and cost savings. He also shares some of Vast Data’s use cases and customers, such as CoreWeave, G42, and Lambda, along with the company’s future plans and vision.

AI and Data Storage: Reducing Costs and Improving Scalability:

This article looks at the impact of AI on data storage and how it can help businesses overcome the challenges of managing and processing large and complex data sets. It traces the evolution of data storage and the rise of AI-driven solutions, such as intelligent storage systems, data compression and deduplication, and data tiering and caching. It also discusses the benefits of AI for data storage, such as reducing costs, improving scalability, enhancing performance, and ensuring data quality and security.
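To make one of the techniques named above more concrete, here is a minimal sketch of block-level deduplication in Python. It is only an illustration and does not describe how any particular product works: the function names `deduplicate` and `restore`, the fixed 4 KiB block size, and the in-memory dictionary used as the block store are all assumptions chosen for brevity.

```python
import hashlib


def deduplicate(data: bytes, block_size: int = 4096):
    """Split data into fixed-size blocks and keep each unique block once.

    Hypothetical helper for illustration only. Returns a (store, recipe)
    pair: store maps a SHA-256 digest to the block bytes, and recipe lists
    the digests in the order needed to rebuild the original data.
    """
    store = {}
    recipe = []
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        digest = hashlib.sha256(block).hexdigest()
        # Only the first copy of a block is stored; repeats reuse the digest.
        store.setdefault(digest, block)
        recipe.append(digest)
    return store, recipe


def restore(store, recipe) -> bytes:
    """Rebuild the original bytes from the block store and the recipe."""
    return b"".join(store[digest] for digest in recipe)


if __name__ == "__main__":
    # Three identical blocks followed by one different block.
    sample = b"A" * 4096 * 3 + b"B" * 4096
    store, recipe = deduplicate(sample)
    stored_bytes = sum(len(block) for block in store.values())
    print(f"logical size: {len(sample)} bytes, stored: {stored_bytes} bytes")
    assert restore(store, recipe) == sample
```

The saving comes from repeated blocks being stored once and referenced many times; real systems typically add variable-size chunking, persistent indexes, and reference counting, none of which are shown here.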

DAOS- Intel’s Open Source baby for Super Computing and AI:

This article introduces DAOS, a distributed asynchronous object storage system developed by Intel and other collaborators and designed for supercomputing and AI applications. The article explains the features and benefits of DAOS, such as its high bandwidth, low latency, and high IOPS, its support for fine-grained data access and manipulation, and its compatibility with next-generation NVM technologies. It also provides some examples of how DAOS is being used and adopted by various organizations and projects, such as the Exascale Computing Project, the HDF Group, and TensorFlow-IO.
